Nodeless EKS Clusters

with Fargate and Karpenter

Sick of updating AMIs on managed node groups? This is how I got rid of them.

Why

I saw three major benefits when I moved to Karpenter.

- Cluster upgrades became a breeze. I could update the EKS control plane, and Karpenter would automatically detect drift and roll all of my nodes for me.

- Maintaining compliant AMIs on nodes became automatic. No more waiting for the ASG to roll each node at an agonizing pace. No more 40+ minute Terraform runs.

- Cost savings! Instances were continuously right-sized, and Spot Instances could be used and managed automatically.

But one problem remained: every cluster still had one managed node group to bootstrap Karpenter. I still had to update that node group at least once a month to stay ahead of patch compliance goals, and it was toilsome.

This is where Fargate came in.

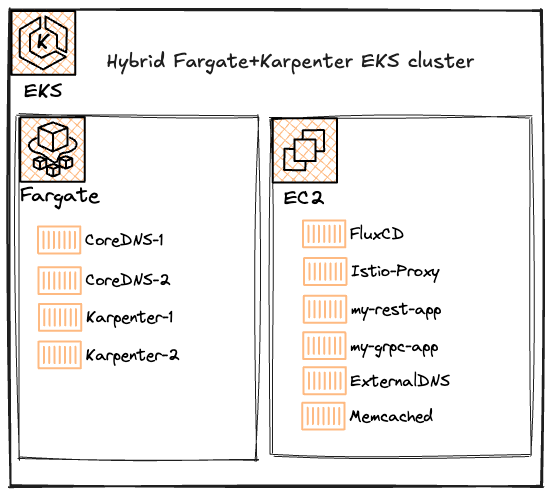

What

- Spin up a new cluster WITHOUT the customary base managed node group.

- Deploy Karpenter and CoreDNS to Fargate in the cluster.

- Deploy Flux, Argo, etc., and all of your actual workloads onto Karpenter-managed nodes.
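Fargate profile selectors decide which pods land on Fargate and which are left for Karpenter. A pod matches a selector when two conditions hold: it is in the selector's namespace, and it carries every label the selector lists. The pod runs on Fargate if it matches any selector in the profile. The Python sketch below models that rule using the two selectors from the Terraform further down. It is a simplified model that ignores the wildcard matching Fargate selectors also accept, and the pod labels are illustrative.

```python
from typing import Any, Dict, List

# Selectors copied from the kube_system Fargate profile in this post's Terraform.
KUBE_SYSTEM_SELECTORS: List[Dict[str, Any]] = [
    {"namespace": "kube-system", "labels": {"k8s-app": "kube-dns"}},
    {"namespace": "kube-system", "labels": {"app.kubernetes.io/name": "karpenter"}},
]


def selector_matches(selector: Dict[str, Any], namespace: str, labels: Dict[str, str]) -> bool:
    """Namespace must be equal, and every label in the selector must be on the pod."""
    if selector["namespace"] != namespace:
        return False
    wanted: Dict[str, str] = selector.get("labels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def runs_on_fargate(selectors: List[Dict[str, Any]], namespace: str, labels: Dict[str, str]) -> bool:
    """A pod goes to Fargate if it matches any selector in the profile (wildcards not modelled)."""
    return any(selector_matches(s, namespace, labels) for s in selectors)


if __name__ == "__main__":
    # Illustrative labels; extra labels on a real pod don't affect the match.
    pods = {
        "coredns": ("kube-system", {"k8s-app": "kube-dns", "pod-template-hash": "5b87ff9b5"}),
        "karpenter": ("kube-system", {"app.kubernetes.io/name": "karpenter"}),
        "aws-node": ("kube-system", {"k8s-app": "aws-node"}),
        "inflate": ("default", {"app": "inflate"}),
    }
    for name, (namespace, labels) in pods.items():
        where = "Fargate" if runs_on_fargate(KUBE_SYSTEM_SELECTORS, namespace, labels) else "Karpenter node"
        print(f"{name}: {where}")
```

Anything that matches no selector is left to the regular scheduler, where Karpenter picks it up. That includes DaemonSets like aws-node and every workload outside kube-system.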

How

Terraform the cluster

Start by terraforming your cluster with Fargate profiles for CoreDNS and Karpenter.

```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.22"

  cluster_name    = local.cluster_name
  cluster_version = local.cluster_version

  cluster_endpoint_private_access = true
  cluster_endpoint_public_access  = false

  vpc_id                   = data.aws_vpc.default.id
  control_plane_subnet_ids = data.aws_subnets.other.ids
  subnet_ids               = data.aws_subnets.private.ids

  enable_cluster_creator_admin_permissions = true
  authentication_mode                      = "API"

  cluster_addons = {
    kube-proxy = {}
    vpc-cni    = {}
    coredns = {
      configuration_values = jsonencode({
        computeType = "fargate"
        # Fargate adds 256 MB per pod for its own components, so a 256M request
        # keeps CoreDNS on the smallest Fargate size (0.25 vCPU / 0.5 GB)
        resources = {
          limits = {
            cpu    = "0.25"
            memory = "256M"
          }
          requests = {
            cpu    = "0.25"
            memory = "256M"
          }
        }
      })
    }
  }

  # Pods matching any of these selectors run on Fargate; everything else is left for Karpenter
  fargate_profiles = {
    kube_system = {
      name = "kube-system"
      selectors = [
        {
          namespace = "kube-system"
          labels    = { k8s-app = "kube-dns" }
        },
        {
          namespace = "kube-system"
          labels    = { "app.kubernetes.io/name" = "karpenter" }
        }
      ]
    }
  }

  access_entries = {
    admin = {
      principal_arn     = "<Insert your SSO role ARN>"
      kubernetes_groups = ["cluster-admin"]

      policy_associations = {
        single = {
          policy_arn = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"
          access_scope = {
            type = "cluster"
          }
        }
      }
    }
  }

  cluster_security_group_additional_rules = {
    ingress_self_all = {
      description = "Allow Atlantis and VPN to reach control plane"
      protocol    = "tcp"
      from_port   = 443
      to_port     = 443
      type        = "ingress"
      cidr_blocks = ["10.0.0.0/8", "172.16.0.0/12"]
    }
  }

  # Lets Karpenter's EC2NodeClass discover the node security group by tag
  node_security_group_tags = {
    "karpenter.sh/discovery" = local.cluster_name
  }
}
```

Terraform IAM and SQS for Karpenter
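The module that follows switches Karpenter from EKS Pod Identity to IRSA, because Pod Identity isn't supported for pods on Fargate. With IRSA, `irsa_oidc_provider_arn` and `irsa_namespace_service_accounts` end up as a web-identity trust policy on the controller's IAM role. The Python function below builds a policy of that general shape, to give a rough picture of what the trust policy looks like. It is a sketch: the JSON the module actually renders may differ in detail, and the account ID and OIDC ID in the example are placeholders.

```python
import json
from typing import Any, Dict, List


def irsa_trust_policy(oidc_provider_arn: str, namespace_service_accounts: List[str]) -> Dict[str, Any]:
    """Build an IRSA-style trust policy for the given "namespace:serviceaccount" pairs."""
    # The issuer (oidc.eks.<region>.amazonaws.com/id/<ID>) is the part of the ARN after "oidc-provider/".
    issuer = oidc_provider_arn.split(":oidc-provider/", 1)[1]
    subjects = [f"system:serviceaccount:{pair}" for pair in namespace_service_accounts]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": oidc_provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        f"{issuer}:sub": subjects,             # only these service accounts may assume the role
                        f"{issuer}:aud": "sts.amazonaws.com",  # audience of the projected token
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder ARN; in Terraform this would be module.eks.oidc_provider_arn.
    arn = "arn:aws:iam::111122223333:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE1234"
    print(json.dumps(irsa_trust_policy(arn, ["kube-system:karpenter"]), indent=2))
```

This trust relationship is also why the Karpenter Helm values annotate the service account with `eks.amazonaws.com/role-arn`. The pod's token is accepted only for a `sub` listed in the policy.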

```hcl
module "karpenter" {
  source  = "terraform-aws-modules/eks/aws//modules/karpenter"
  version = "~> 20.22"

  # EKS Pod Identity isn't supported on Fargate, so the controller uses IRSA
  enable_pod_identity             = false
  create_pod_identity_association = false
  enable_irsa                     = true
  irsa_oidc_provider_arn          = module.eks.oidc_provider_arn
  irsa_namespace_service_accounts = ["kube-system:karpenter"]

  cluster_name = module.eks.cluster_name

  # Allows SSM Session Manager access to Karpenter-launched nodes
  node_iam_role_additional_policies = {
    AmazonSSMManagedInstanceCore = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
  }
}
```

Add providers for helm and kubectl

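```hcl
# kubectl is a community provider, so it must be declared explicitly.
# If you already declare required_providers elsewhere, merge these entries there.
terraform {
  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.0" # the nested kubernetes {} block below is helm provider v2 syntax
    }
    kubectl = {
      # Assumption: the alekc/kubectl fork; gavinbunney/kubectl accepts the same settings
      source  = "alekc/kubectl"
      version = ">= 2.0"
    }
  }
}

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]
    }
  }
}

provider "kubectl" {
  apply_retry_count      = 5
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
  load_config_file       = false

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]
  }
}

# This data source only works in us-east-1; if your default aws provider
# targets another region, point it at a us-east-1 provider alias.
data "aws_ecrpublic_authorization_token" "token" {}
```

Helm release Karpenter CRDs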

```hcl
resource "helm_release" "karpenter_crd" {
  namespace           = "kube-system"
  name                = "karpenter-crd"
  repository          = "oci://public.ecr.aws/karpenter"
  repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart               = "karpenter-crd"
  version             = "1.0.1" # keep in lockstep with the karpenter chart below
  wait                = false

  # Point the CRDs' conversion webhook at the Karpenter service
  values = [
    <<-EOT
    webhook:
      enabled: true
      serviceName: karpenter
      serviceNamespace: kube-system
      port: 8443
    EOT
  ]
}
```

Helm release Karpenter itself
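Every Fargate node carries the taint `eks.amazonaws.com/compute-type=fargate:NoSchedule`, and the release that follows gives the Karpenter pods a matching toleration. In Kubernetes, a toleration matches a taint when three conditions hold:

- The keys are equal, or the toleration uses `Exists` with an empty key, which matches any key.
- The effects are equal, or the toleration's effect is empty.
- The operator is `Exists`, or the values are equal.

The small Python sketch below expresses that rule. It is a simplified model, not the scheduler's own code.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # Kubernetes defaults the operator to Equal
    value: str = ""
    effect: str = ""  # empty means "any effect"


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Simplified toleration-matches-taint rule."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key in ("", taint.key)  # empty key + Exists tolerates everything
    return tol.key == taint.key and tol.value == taint.value


def schedulable(tolerations: Iterable[Toleration], taints: Iterable[Taint]) -> bool:
    """True if every NoSchedule/NoExecute taint is tolerated (PreferNoSchedule is only a preference)."""
    tols = list(tolerations)
    return all(
        any(tolerates(t, taint) for t in tols)
        for taint in taints
        if taint.effect in ("NoSchedule", "NoExecute")
    )


FARGATE_TAINT = Taint("eks.amazonaws.com/compute-type", "fargate", "NoSchedule")

# The two tolerations from the Karpenter Helm values in this post.
KARPENTER_TOLERATIONS = [
    Toleration(key="CriticalAddonsOnly", operator="Exists"),
    Toleration(key="eks.amazonaws.com/compute-type", operator="Equal", value="fargate", effect="NoSchedule"),
]

if __name__ == "__main__":
    print("Karpenter pod tolerates Fargate taint:", schedulable(KARPENTER_TOLERATIONS, [FARGATE_TAINT]))
    print("Pod without tolerations:", schedulable([], [FARGATE_TAINT]))
```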

```hcl
resource "helm_release" "karpenter" {
  namespace           = "kube-system"
  name                = "karpenter"
  repository          = "oci://public.ecr.aws/karpenter"
  repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart               = "karpenter"
  version             = "1.0.1"
  wait                = false

  values = [
    <<-EOT
    serviceAccount:
      name: ${module.karpenter.service_account}
      annotations:
        # IRSA role created by the karpenter module
        eks.amazonaws.com/role-arn: ${module.karpenter.iam_role_arn}
    settings:
      clusterName: ${module.eks.cluster_name}
      clusterEndpoint: ${module.eks.cluster_endpoint}
      interruptionQueue: ${module.karpenter.queue_name}
    tolerations:
      - key: CriticalAddonsOnly
        operator: Exists
      # Fargate nodes are tainted eks.amazonaws.com/compute-type=fargate:NoSchedule
      - key: eks.amazonaws.com/compute-type
        operator: Equal
        value: fargate
        effect: NoSchedule
    controller:
      resources:
        requests:
          cpu: 1000m
          memory: 1024Mi
        limits:
          cpu: 1000m
          memory: 1024Mi
    EOT
  ]

  depends_on = [
    helm_release.karpenter_crd
  ]
}
```

Add a default NodeClass

```hcl
resource "kubectl_manifest" "karpenter_node_class" {
  yaml_body = <<-YAML
    apiVersion: karpenter.k8s.aws/v1
    kind: EC2NodeClass
    metadata:
      name: default
    spec:
      amiSelectorTerms:
        # @latest: Karpenter drifts nodes onto each new AL2023 AMI release
        - alias: al2023@latest
      role: ${module.karpenter.node_iam_role_name}
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: ${local.env}
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: ${module.eks.cluster_name}
        # Cluster primary security group, shared with Fargate pods such as CoreDNS
        - id: ${module.eks.cluster_primary_security_group_id}
      tags:
        karpenter.sh/discovery: ${module.eks.cluster_name}
  YAML

  depends_on = [
    helm_release.karpenter
  ]
}
```

Add a default NodePool

```hcl
resource "kubectl_manifest" "karpenter_node_pool" {
  yaml_body = <<-YAML
    apiVersion: karpenter.sh/v1
    kind: NodePool
    metadata:
      name: default
    spec:
      template:
        spec:
          nodeClassRef:
            group: karpenter.k8s.aws
            kind: EC2NodeClass
            name: default
          requirements:
            - key: "karpenter.k8s.aws/instance-category"
              operator: In
              values: ["c", "m", "r"]
            - key: "karpenter.k8s.aws/instance-cpu"
              operator: In
              values: ["4", "8", "16", "32"]
            - key: "karpenter.k8s.aws/instance-hypervisor"
              operator: In
              values: ["nitro"]
            - key: "karpenter.k8s.aws/instance-generation"
              operator: Gt
              values: ["2"]
      limits:
        cpu: 1000  # cap on total vCPUs this NodePool may provision
      disruption:
        consolidationPolicy: WhenEmpty
        consolidateAfter: 30s
  YAML

  depends_on = [
    kubectl_manifest.karpenter_node_class
  ]
}
```

Add a test deployment

```hcl
resource "kubectl_manifest" "karpenter_example_deployment" {
  yaml_body = <<-YAML
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: inflate
    spec:
      replicas: 0  # scale up by hand to trigger Karpenter
      selector:
        matchLabels:
          app: inflate
      template:
        metadata:
          labels:
            app: inflate
        spec:
          terminationGracePeriodSeconds: 0
          containers:
            - name: inflate
              image: public.ecr.aws/eks-distro/kubernetes/pause:3.7
              resources:
                requests:
                  cpu: 1
  YAML

  depends_on = [
    helm_release.karpenter
  ]
}
```

Testing…

Once you’ve run all of the above, you’ll have an EKS cluster running 4 pods (2x CoreDNS, 2x Karpenter) on 4 minimal Fargate nodes, since Fargate gives every pod its own node.
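Fargate sizes each of those nodes from the pod's requests. Per the EKS Fargate pod configuration docs, Fargate first adds 256 MB for the Kubernetes components it runs alongside the pod (kubelet, kube-proxy, containerd). It then rounds up to the nearest supported vCPU/memory combination. The Python sketch below applies that rule to the CoreDNS and Karpenter requests from this post. It makes three simplifications: it assumes Fargate's "GB" means GiB, parses only the quantity suffixes used here, and ignores init containers.

```python
from typing import List, Tuple

# Supported Fargate sizes: (vCPU, allowed memory values in GB), per the EKS Fargate docs.
FARGATE_SIZES: List[Tuple[float, List[float]]] = [
    (0.25, [0.5, 1, 2]),
    (0.5, [1, 2, 3, 4]),
    (1, list(range(2, 9))),         # 2-8 GB, 1 GB steps
    (2, list(range(4, 17))),        # 4-16 GB, 1 GB steps
    (4, list(range(8, 31))),        # 8-30 GB, 1 GB steps
    (8, list(range(16, 61, 4))),    # 16-60 GB, 4 GB steps
    (16, list(range(32, 121, 8))),  # 32-120 GB, 8 GB steps
]
FARGATE_OVERHEAD_MIB = 256  # added to every pod for kubelet, kube-proxy and containerd

# Only the suffixes this post uses; values are bytes per unit.
_MEMORY_UNITS = {"Gi": 2**30, "Mi": 2**20, "G": 10**9, "M": 10**6}


def parse_memory_mib(quantity: str) -> float:
    for suffix in ("Gi", "Mi", "G", "M"):  # check binary suffixes before decimal ones
        if quantity.endswith(suffix):
            return float(quantity[: -len(suffix)]) * _MEMORY_UNITS[suffix] / 2**20
    return float(quantity) / 2**20  # a bare number is bytes


def parse_cpu(quantity: str) -> float:
    return float(quantity[:-1]) / 1000 if quantity.endswith("m") else float(quantity)


def fargate_size(cpu_request: str, memory_request: str) -> Tuple[float, float]:
    """Return the (vCPU, GB) size a pod rounds up to. Assumption: Fargate's GB are GiB."""
    vcpu = parse_cpu(cpu_request)
    mem_gib = (parse_memory_mib(memory_request) + FARGATE_OVERHEAD_MIB) / 1024
    for size_vcpu, memory_options in FARGATE_SIZES:
        if size_vcpu < vcpu:
            continue
        for gb in memory_options:
            if gb >= mem_gib:
                return size_vcpu, gb
    raise ValueError("request exceeds the largest Fargate size")


if __name__ == "__main__":
    print("CoreDNS:", fargate_size("0.25", "256M"))      # -> (0.25, 0.5)
    print("Karpenter:", fargate_size("1000m", "1024Mi"))  # -> (1, 2)
```

By this model, CoreDNS fits the smallest size (0.25 vCPU / 0.5 GB). The raised Karpenter requests from the update notes put each Karpenter pod on a 1 vCPU / 2 GB node.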

```
❯ kubectl get nodes
NAME                                  STATUS   ROLES    AGE    VERSION
fargate-ip-10-0-24-69.ec2.internal    Ready    <none>   17d    v1.30.0-eks-404b9c6
fargate-ip-10-0-45-231.ec2.internal   Ready    <none>   4h2m   v1.30.0-eks-404b9c6
fargate-ip-10-0-49-95.ec2.internal    Ready    <none>   17d    v1.30.0-eks-404b9c6
fargate-ip-10-0-57-54.ec2.internal    Ready    <none>   4h2m   v1.30.0-eks-404b9c6

❯ kubectl get pods -A
NAMESPACE     NAME                         READY   STATUS    RESTARTS   AGE
kube-system   coredns-5b87ff9b5-64jch      1/1     Running   0          17d
kube-system   coredns-5b87ff9b5-ss4wf      1/1     Running   0          17d
kube-system   karpenter-666d8f76ff-vr849   1/1     Running   0          4h11m
kube-system   karpenter-666d8f76ff-x59tw   1/1     Running   0          4h11m
```

Try spinning up some pods in our test deployment.

```
❯ kubectl scale deployment inflate --replicas 5
deployment.apps/inflate scaled
```

Within a few minutes you should see Karpenter spin up a new node. DaemonSet pods for that node (aws-node and kube-proxy) should show up as well.
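Why does one node suffice? The five `inflate` replicas request 1 CPU each. As a back-of-envelope check, the sketch below finds the smallest `instance-cpu` size allowed by the default NodePool whose allocatable CPU can hold all five replicas plus the DaemonSet pods. It makes two adjustments:

- It subtracts kube-reserved CPU using the tiered formula that EKS-optimized AMIs use: 6% of the first core, 1% of the second, 0.5% of the third and fourth, and 0.25% of the rest.
- It assumes 125m of DaemonSet requests for aws-node and kube-proxy.

Both the single-node packing and the DaemonSet figure are simplifying assumptions. Karpenter actually weighs price across many instance types, so treat this as intuition rather than a prediction of the instance it picks.

```python
from typing import Iterable

# instance-cpu values allowed by the default NodePool in this post.
ALLOWED_VCPUS = [4, 8, 16, 32]

# Assumed DaemonSet CPU requests (aws-node + kube-proxy); not measured from the cluster.
ASSUMED_DAEMONSET_MILLICORES = 125


def kube_reserved_millicores(vcpus: int) -> float:
    """Tiered kube-reserved CPU: 6% of core 1, 1% of core 2, 0.5% of cores 3-4, 0.25% beyond."""
    tiers = [(1, 0.06), (1, 0.01), (2, 0.005), (float("inf"), 0.0025)]  # (cores in tier, fraction)
    remaining, reserved = vcpus, 0.0
    for cores, fraction in tiers:
        used = min(remaining, cores)
        reserved += used * 1000 * fraction
        remaining -= used
        if remaining <= 0:
            break
    return reserved


def smallest_single_node(pod_millicores: Iterable[int], daemonset_millicores: int,
                         sizes: Iterable[int] = ALLOWED_VCPUS) -> int:
    """Smallest allowed vCPU size whose allocatable CPU fits every pod plus DaemonSet overhead."""
    needed = sum(pod_millicores) + daemonset_millicores
    for vcpus in sorted(sizes):
        if vcpus * 1000 - kube_reserved_millicores(vcpus) >= needed:
            return vcpus
    raise ValueError("pods don't fit on one node of any allowed size")


if __name__ == "__main__":
    print(smallest_single_node([1000] * 5, ASSUMED_DAEMONSET_MILLICORES))
```

With five replicas, the 4-vCPU size falls short at about 3.92 cores allocatable, while the 8-vCPU size fits, so a single node can absorb them all. With three replicas, a 4-vCPU node would do.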

```
❯ kubectl get nodes
NAME                                  STATUS   ROLES    AGE    VERSION
fargate-ip-10-0-24-69.ec2.internal    Ready    <none>   17d    v1.30.0-eks-404b9c6
fargate-ip-10-0-45-231.ec2.internal   Ready    <none>   4h4m   v1.30.0-eks-404b9c6
fargate-ip-10-0-49-95.ec2.internal    Ready    <none>   17d    v1.30.0-eks-404b9c6
fargate-ip-10-0-57-54.ec2.internal    Ready    <none>   4h4m   v1.30.0-eks-404b9c6
ip-10-0-104-83.ec2.internal           Ready    <none>   57s    v1.30.2-eks-1552ad0

❯ kubectl get pods -A
NAMESPACE     NAME                         READY   STATUS    RESTARTS   AGE
default       inflate-66fb68585c-drc69     1/1     Running   0          92s
default       inflate-66fb68585c-lvlxl     1/1     Running   0          92s
default       inflate-66fb68585c-qf42p     1/1     Running   0          92s
default       inflate-66fb68585c-qmz5j     1/1     Running   0          92s
default       inflate-66fb68585c-wqn6n     1/1     Running   0          92s
kube-system   aws-node-5rkkl               2/2     Running   0          67s
kube-system   coredns-5b87ff9b5-64jch      1/1     Running   0          17d
kube-system   coredns-5b87ff9b5-ss4wf      1/1     Running   0          17d
kube-system   karpenter-666d8f76ff-vr849   1/1     Running   0          4h5m
kube-system   karpenter-666d8f76ff-x59tw   1/1     Running   0          4h5m
kube-system   kube-proxy-z2tbk             1/1     Running   0          67s
```

Finish the test by spinning the deployment back down. If you’ve deployed nothing else, the Karpenter node will disappear within minutes as well.
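The node disappears because the default NodePool uses `consolidationPolicy: WhenEmpty` with `consolidateAfter: 30s`. In simplified terms, a node counts as empty once only DaemonSet pods remain on it (here `aws-node` and `kube-proxy`). It becomes a consolidation candidate after its pods have stopped changing for `consolidateAfter`. The minimal Python model below captures that check. It leaves out disruption budgets and the `karpenter.sh/do-not-disrupt` annotation, either of which can also hold a node back.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PodOnNode:
    name: str
    from_daemonset: bool


def is_empty(pods: List[PodOnNode]) -> bool:
    """Simplified: DaemonSet pods don't keep a node alive; any other pod does."""
    return all(p.from_daemonset for p in pods)


def consolidation_candidate(pods: List[PodOnNode], seconds_since_last_pod_change: float,
                            consolidate_after_seconds: float = 30) -> bool:
    """Simplified WhenEmpty check: the node is empty and has been for at least consolidateAfter."""
    return is_empty(pods) and seconds_since_last_pod_change >= consolidate_after_seconds


if __name__ == "__main__":
    daemonsets = [PodOnNode("aws-node-5rkkl", True), PodOnNode("kube-proxy-z2tbk", True)]
    busy = daemonsets + [PodOnNode("inflate-66fb68585c-drc69", False)]
    print(consolidation_candidate(busy, 120))       # inflate still running
    print(consolidation_candidate(daemonsets, 10))  # empty, but only just
    print(consolidation_candidate(daemonsets, 45))  # empty for longer than 30s
```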

```
❯ kubectl scale deployment inflate --replicas 0
deployment.apps/inflate scaled
```

What’s next?

Go bootstrap Flux or Argo CD on your new cluster and watch Karpenter spin up new nodes to accommodate them. Then maybe drop some more nuanced NodeClasses and NodePools into your GitOps repo.
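When writing more nuanced NodePools, it helps to check which instance types a set of requirements admits. Below is a rough Python model of the `In` and `Gt` operators applied to the default NodePool's four requirements. Other operators (`NotIn`, `Exists`, `Lt`, and so on) are not modelled. The instance attributes are hand-written illustrations, not data pulled from AWS.

```python
from typing import Dict, List, Tuple

Requirement = Tuple[str, str, List[str]]  # (key, operator, values)

# Requirements from the default NodePool in this post.
DEFAULT_REQUIREMENTS: List[Requirement] = [
    ("karpenter.k8s.aws/instance-category", "In", ["c", "m", "r"]),
    ("karpenter.k8s.aws/instance-cpu", "In", ["4", "8", "16", "32"]),
    ("karpenter.k8s.aws/instance-hypervisor", "In", ["nitro"]),
    ("karpenter.k8s.aws/instance-generation", "Gt", ["2"]),
]


def satisfies(labels: Dict[str, str], requirement: Requirement) -> bool:
    key, operator, values = requirement
    if key not in labels:
        return False
    if operator == "In":
        return labels[key] in values
    if operator == "Gt":
        return int(labels[key]) > int(values[0])
    raise ValueError(f"operator {operator!r} is not modelled in this sketch")


def admitted(labels: Dict[str, str], requirements: List[Requirement] = DEFAULT_REQUIREMENTS) -> bool:
    """Requirements are ANDed: an instance type must satisfy every one."""
    return all(satisfies(labels, r) for r in requirements)


def instance_labels(category: str, cpu: int, hypervisor: str, generation: int) -> Dict[str, str]:
    """Build illustrative well-known labels for an instance type."""
    return {
        "karpenter.k8s.aws/instance-category": category,
        "karpenter.k8s.aws/instance-cpu": str(cpu),
        "karpenter.k8s.aws/instance-hypervisor": hypervisor,
        "karpenter.k8s.aws/instance-generation": str(generation),
    }


# Hand-written examples, not an authoritative catalogue.
CANDIDATES = {
    "m5.xlarge": instance_labels("m", 4, "nitro", 5),
    "c6i.2xlarge": instance_labels("c", 8, "nitro", 6),
    "m4.xlarge": instance_labels("m", 4, "xen", 4),   # rejected: not Nitro
    "t3.large": instance_labels("t", 2, "nitro", 3),  # rejected: category and CPU count
    "r5.large": instance_labels("r", 2, "nitro", 5),  # rejected: only 2 vCPUs
}

if __name__ == "__main__":
    for name, labels in CANDIDATES.items():
        print(name, "admitted" if admitted(labels) else "rejected")
```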

Let’s talk about cost

It’s true that four minimal Fargate nodes will still cost tens of dollars more per cluster per month than your old managed node group, but that is a drop in the bucket compared to what you’ll save on maintenance.
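The sketch below puts a rough number on the Fargate side by pricing the four pods at a per-vCPU-hour and per-GB-hour rate over a 730-hour month. It rests on two sets of assumptions:

- Sizes: CoreDNS runs on 0.25 vCPU / 0.5 GB, and each Karpenter pod, with the 1 vCPU / 1024Mi requests above, runs on 1 vCPU / 2 GB. This follows from Fargate adding 256 MB per pod and rounding up.
- Rates: the figures are example Linux/x86 rates. Check current Fargate pricing for your region before relying on the result.

```python
from typing import List, Tuple

HOURS_PER_MONTH = 730  # common billing approximation (8760 h / 12)

# (pod count, vCPU, GB) per Fargate size; sizes assumed from Fargate's round-up rules.
FARGATE_PODS: List[Tuple[int, float, float]] = [
    (2, 0.25, 0.5),  # CoreDNS
    (2, 1.0, 2.0),   # Karpenter controller
]


def monthly_fargate_cost(pods: List[Tuple[int, float, float]], usd_per_vcpu_hour: float,
                         usd_per_gb_hour: float) -> float:
    """Sum per-pod vCPU and memory charges, then scale to a month."""
    hourly = sum(count * (vcpu * usd_per_vcpu_hour + gb * usd_per_gb_hour) for count, vcpu, gb in pods)
    return hourly * HOURS_PER_MONTH


if __name__ == "__main__":
    # Example rates only; substitute current Fargate pricing for your region.
    cost = monthly_fargate_cost(FARGATE_PODS, usd_per_vcpu_hour=0.04048, usd_per_gb_hour=0.004445)
    print(f"~${cost:.2f} per cluster per month")
```

At those example rates, the four pods come to roughly $90 per cluster per month, before subtracting whatever the retired managed node group cost.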

Notable Updates

Things I’ve changed since publishing…

- Added label selectors to the Fargate profile

- Added the cluster primary security group ID to the Karpenter node class (this ensures pods on Karpenter nodes can reach CoreDNS on Fargate nodes)

- Increased requests/limits for Karpenter
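The security group change works because `securityGroupSelectorTerms` are ORed: a group is attached to a Karpenter node if it matches any term, and inside a `tags` term every tag must match. Fargate pods, CoreDNS included, get the cluster primary security group, which by default allows all traffic from other members of the same group. Listing that group by ID puts Karpenter nodes inside it too. Below is a minimal Python model of the selection. The cluster name, group IDs and tags are hypothetical, and tag wildcards and `name` terms are left out.

```python
from typing import Any, Dict, List

# Hypothetical security groups for a cluster named "demo"; IDs and tags are made up.
GROUPS: List[Dict[str, Any]] = [
    {"id": "sg-0aaa1111node", "tags": {"karpenter.sh/discovery": "demo"}},   # node SG from the EKS module
    {"id": "sg-0bbb2222cluster", "tags": {"aws:eks:cluster-name": "demo"}},  # cluster primary SG
    {"id": "sg-0ccc3333other", "tags": {"team": "payments"}},                # unrelated group
]

# The two securityGroupSelectorTerms from the EC2NodeClass, with the ID filled in.
TERMS: List[Dict[str, Any]] = [
    {"tags": {"karpenter.sh/discovery": "demo"}},
    {"id": "sg-0bbb2222cluster"},
]


def matches_term(group: Dict[str, Any], term: Dict[str, Any]) -> bool:
    """An id term matches exactly one group; a tags term needs every listed tag (ANDed)."""
    if "id" in term:
        return group["id"] == term["id"]
    wanted: Dict[str, str] = term.get("tags", {})
    return bool(wanted) and all(group["tags"].get(k) == v for k, v in wanted.items())


def selected_groups(groups: List[Dict[str, Any]], terms: List[Dict[str, Any]]) -> List[str]:
    """Terms are ORed: a group is attached if it matches any term."""
    return [g["id"] for g in groups if any(matches_term(g, t) for t in terms)]


if __name__ == "__main__":
    print("both terms:", selected_groups(GROUPS, TERMS))
    print("tags term only:", selected_groups(GROUPS, TERMS[:1]))
```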